Dealing with AI

The UK Government's AI Opportunities Action Plan (Clifford & Department for Science, Innovation and Technology, 2025) sets out an agenda primarily focussed on the growth opportunities enabled by AI.

Many of the recommendations require translation from a problematic context into implementations using specific AI technologies, such as generative pre-trained transformers (GPT), and are therefore all examples of where problematisation is inevitable. As Callon (1980) observes:

“Problematisation culminates in configurations characterised by their relative singularity. There is not one single way of defining problems, identifying and organising what is certain, repressing what cannot be analysed.”

How, then, are these decisions, and the processes of deciding, to be enacted in the operationalisation of this action plan? This question is difficult to answer because the action plan is just a set of recommendations, a “roadmap for government to capture the opportunities of AI to enhance growth and productivity and create tangible benefits for UK citizens.”

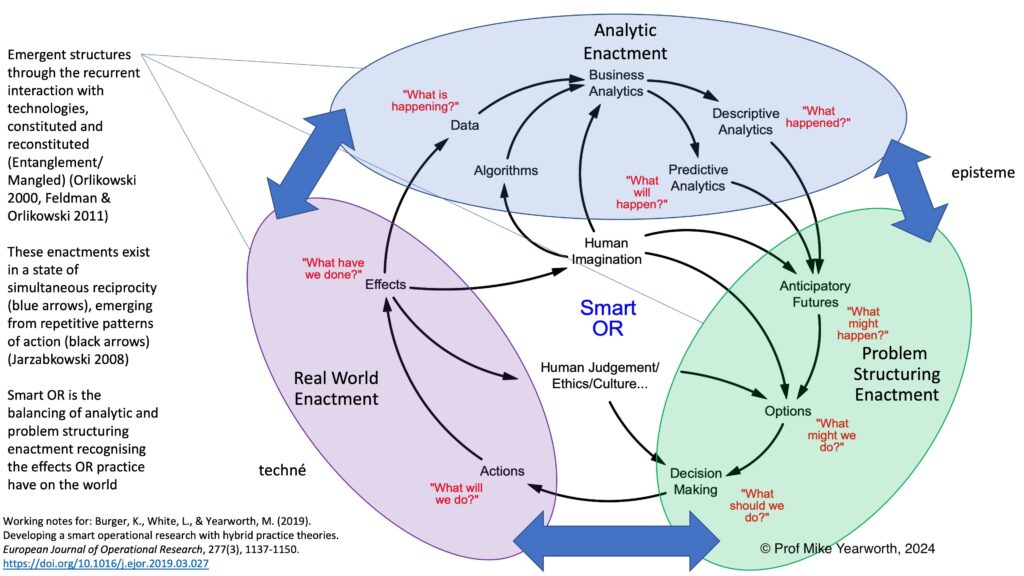

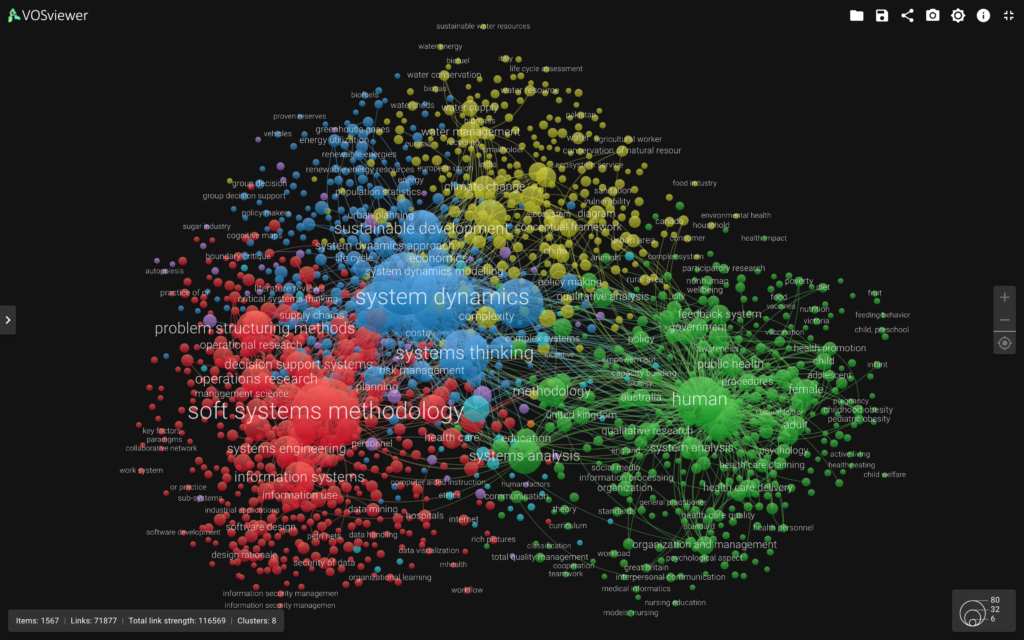

Roadmaps, like actions and solutions, present static nominalisations of what should be a dynamic process. The actual intervening in problematic areas such as health care, education and the provision of services will emerge from the normal processes of deliberating over any technology, not just AI. Restating Callon’s perspective, there will be an “abundance of problematisations” resulting from these deliberating processes, leading to options where specific choices will need to be made. It is in the choosing between options, in the different ways in which interventions with AI technologies can be problematised, that the inevitable conflicts between different stakeholder needs and differing policy objectives will be made clear. It is here that problem structuring as a deliberative process needs to operate.

“The usefulness of Callon’s work on problematisation is that it draws attention to the choices faced by an operational researcher when investigating a problem, and that there is not a single right answer to what is problematised. Whilst this supports the claims of Churchman, Ackoff and Checkland that these choices exist and that operational researchers are making conscious decisions about what to work on (and therefore what to ignore), it does not provide an answer to the problem of OR becoming practitioner-free, i.e. the problem of dealing with situational logics. The answer is found in the emergence of problem structuring methods (PSMs).” (Yearworth, 2025, pp. 13-14)

I was writing for operational researchers in my book, but the point is valid for any analyst or decision maker, whatever their profession. Choices exist, and conscious decisions need to be made about what to work on and what to ignore. These choices need to be made visible and debatable through formal processes – such as through the use of PSMs.

The situational logic that sits at the heart of the AI opportunities action plan is that choosing AI leads to (economic) growth. However, if we abdicate our moral responsibility to make ethical choices and fall back on the simple rule-following of situational logics, then we may as well hand the deliberation and implementation of an AI action plan to an AI itself and wash our hands of the consequences.

I recognise that the AI opportunities action plan makes specific reference to “[g]lobal leadership on AI safety and governance via the AI Safety Institute, and a proportionate, flexible regulatory approach” and that, by overloading the word “proportionate,” it reflects the fact that choices need to be made. Deliberating and deciding over a flexible regulatory approach will require hard work; these will be (and should be) difficult choices. Given the scale of the challenges and opportunities of AI, apportioning sole agency for this deliberating and deciding to the AI Safety Institute (AISI) just narrows the location and scope of debate around problematisations to, in effect, informing decisions about the boundaries of regulation that are broadly pro-innovation. Deflecting focus away from this concentration of decision making by talking about assurance tools in an AI assurance ecosystem just sounds like marketing, i.e. our attention to the situational logic in operation here is being misdirected by the AISI.

For almost all the recommendations in the action plan, problematising should be a diffuse activity across a very broad range of actors, problem contexts, stakeholders, and technologies – putting choice into the hands of people best able to decide for themselves the scope of adoption of AI. By all means give organisations, and individuals, the processes that would enable them to make informed decisions, but such processes are not the same thing as imposed ‘flexible regulation’ and ‘assurance tools’, which ultimately disempower.

- Callon, M. (1980). Struggles and Negotiations to Define What is Problematic and What is Not. In K. D. Knorr, R. Krohn, & R. Whitley (Eds.), The Social Process of Scientific Investigation (pp. 197-219). Springer Netherlands: Dordrecht. https://doi.org/10.1007/978-94-009-9109-5_8

- Clifford, M., & Department for Science, Innovation and Technology. (2025). AI Opportunities Action Plan. HMSO: London. https://www.gov.uk/government/publications/ai-opportunities-action-plan

- Yearworth, M. (2025). Problem Structuring: Methodology in Practice (1st ed.). John Wiley & Sons, Inc.: Hoboken. https://doi.org/10.1002/9781119744856